We’ve all been there. It’s 3 AM, and the pagers are screaming. A critical service is down, customers are impacted, and the on-call team is scrambling through logs and dashboards, trying to piece together a puzzle in the dark. The postmortem later reveals the cause: a rare, cascading failure triggered by a minor network blip—a scenario no one had anticipated, let alone tested for. The classic refrain echoes in the war room: “But it worked perfectly in staging!”

This is the reality of modern distributed systems. Their complexity creates a near-infinite number of potential failure modes, many of which are impossible to predict. Traditional testing helps, but it primarily validates known paths and expected behaviors. To build truly resilient systems, we need to go a step further. We need to embrace failure, not just react to it. This is the world of Chaos Engineering: the discipline of experimenting on a system to build confidence in its capability to withstand turbulent conditions in production.

This guide will take you on a practical journey through Chaos Engineering. We’ll explore why it’s essential, how to conduct experiments safely, what tools to use, and, most importantly, how to foster a culture that views controlled failure as a powerful engine for building unbreakable systems.

From Fragility to Antifragility: The ‘Why’ of Chaos Engineering

The term “Chaos Engineering,” coined by Netflix, can be intimidating. It conjures images of recklessly pulling plugs in a data center. The reality is far more scientific and controlled. It is a methodical, empirical approach to uncovering systemic weaknesses before they manifest as production outages.

Beyond Traditional Testing

Unit, integration, and end-to-end tests are fundamental to software quality. They verify that individual components and entire workflows function as designed under ideal conditions. However, they fall short in a few key areas when it comes to distributed systems:

- They test for knowns: You write a test for a failure you can already imagine. What about the “unknown unknowns”—the emergent, non-obvious failure modes that arise from the complex interplay of microservices, networks, and cloud infrastructure?

- They miss environmental factors: Staging environments rarely, if ever, perfectly replicate the scale, traffic patterns, and noisy-neighbor effects of production.

- They don’t account for cascading failures: A single, seemingly minor issue—like increased latency in a downstream dependency—can ripple through the system, causing a catastrophic, system-wide failure.

Chaos Engineering directly addresses these gaps by deliberately injecting real-world failures into our systems to see how they respond. It’s not about breaking things; it’s about revealing hidden assumptions and design flaws in our resilience strategies.

The Core Principles of Chaos Engineering

At its heart, Chaos Engineering is a form of empirical science applied to software systems. The published Principles of Chaos Engineering outline a clear methodology:

- Define a ‘Steady State’: Start by defining a measurable, quantifiable metric that represents the normal, healthy behavior of your system.

- Formulate a Hypothesis: Articulate a clear hypothesis about how the system will behave when a specific failure is introduced. The hypothesis should be that the steady state will be maintained.

- Introduce Real-World Variables: Inject failures that mirror real-world events, such as server crashes, network latency, or API dependency failures.

- Attempt to Disprove the Hypothesis: Run the experiment and carefully observe the system’s steady state. The goal is to find deviations from your hypothesis, as this is where the learning occurs.

Think of Chaos Engineering as a vaccine for your production environment. You inject a small, controlled amount of a “virus” (a failure) to stimulate the system’s “immune response” (resiliency mechanisms), making it stronger and better prepared for a real-world infection.

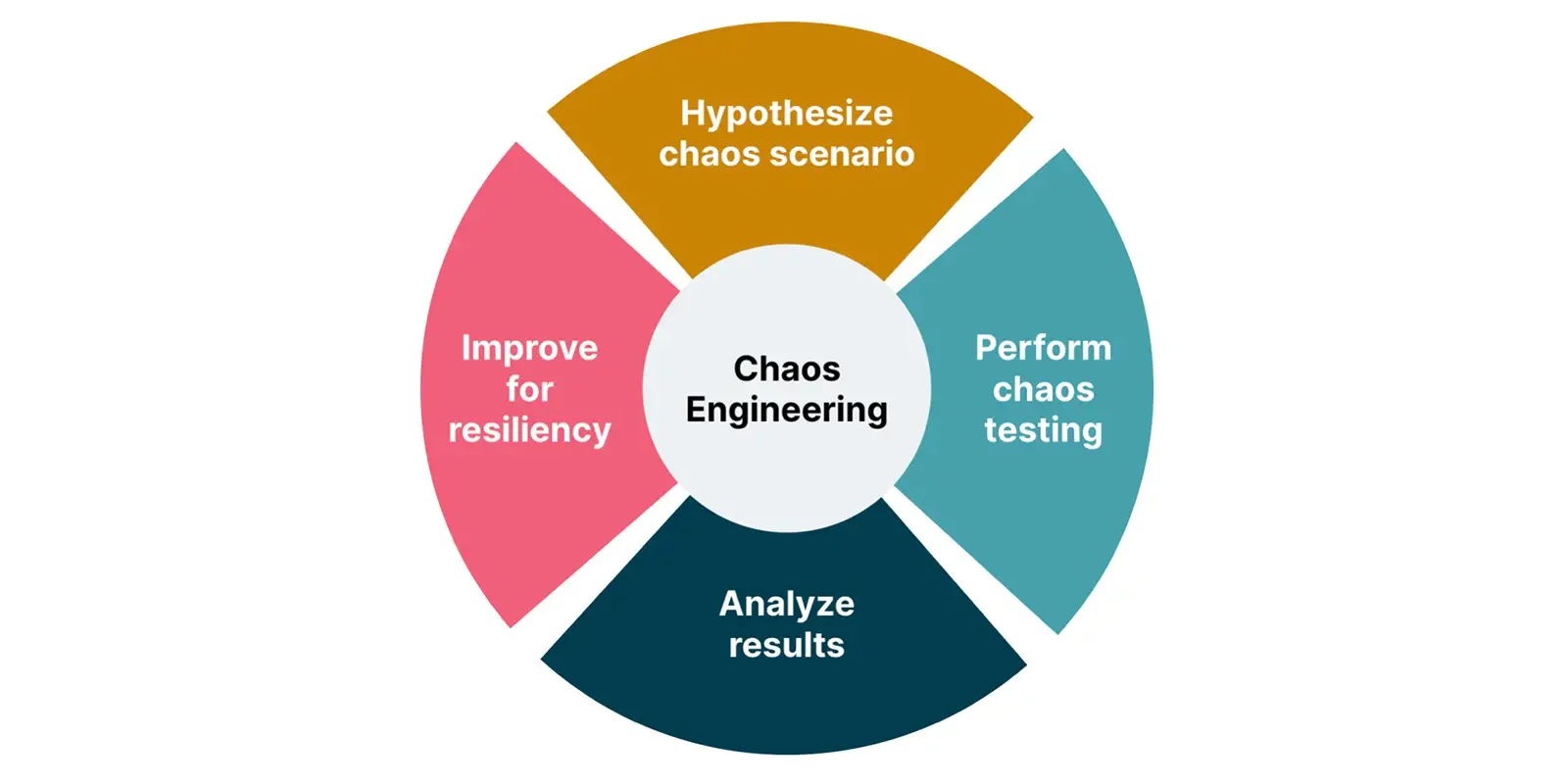

The ‘How’: A Practical Framework for Chaos Experiments

A successful chaos experiment is not a random act of destruction. It’s a carefully planned and executed scientific process. Let’s break it down into four actionable steps.

Step 1: Define Your Steady State

You can’t know if you’ve broken something if you don’t know what “working” looks like. Before you inject any failure, you must have solid observability in place. Your steady state should be defined by key business and system metrics, often referred to as Service Level Indicators (SLIs).

Examples of good steady-state metrics:

- Throughput: The system processes at least 1,000 requests per second.

- Error Rate: The percentage of 5xx server errors on the API gateway is below 0.1%.

- Latency: The 99th percentile (p99) latency for the `GET /products` endpoint is under 150ms.

- Business Metric: The rate of successful checkout completions remains above 99.5%.

These metrics should be visible on a dashboard (e.g., using Grafana, Datadog, or New Relic) so you can monitor them in real time during an experiment.
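To make the idea concrete, here is a minimal sketch of a steady state expressed as code rather than as a dashboard. The thresholds come from the examples above; the metric names and the `snapshot` values are hypothetical, and in practice the numbers would come from your monitoring backend.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class SteadyStateCheck:
    """One SLI together with the bound it must satisfy when the system is healthy."""
    name: str
    is_healthy: Callable[[float], bool]


# Thresholds from the examples above; the metric names are hypothetical.
STEADY_STATE = [
    SteadyStateCheck("requests_per_second", lambda v: v >= 1000),
    SteadyStateCheck("gateway_5xx_percent", lambda v: v < 0.1),
    SteadyStateCheck("get_products_p99_ms", lambda v: v < 150),
    SteadyStateCheck("checkout_success_percent", lambda v: v > 99.5),
]


def violations(metrics: Dict[str, float]) -> List[str]:
    """Return the names of SLIs that are missing or outside their bound."""
    failed = []
    for check in STEADY_STATE:
        value = metrics.get(check.name)
        if value is None or not check.is_healthy(value):
            failed.append(check.name)
    return failed


# Hypothetical readings taken during an experiment.
snapshot = {
    "requests_per_second": 1240.0,
    "gateway_5xx_percent": 0.04,
    "get_products_p99_ms": 162.0,
    "checkout_success_percent": 99.7,
}
print(violations(snapshot))  # ['get_products_p99_ms']
```

Treating a missing metric as a violation is a deliberate choice in this sketch: if you cannot see an SLI, you cannot claim the steady state held.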

Step 2: Formulate a Hypothesis

Your hypothesis frames the experiment. It states what you believe will happen and why. This forces you to think critically about your system’s resiliency mechanisms.

A strong hypothesis follows this structure: “We believe that if we [inject failure X], then [steady-state metric Y] will remain unchanged because [resiliency mechanism Z] will handle it.”

Example Hypothesis:

“We hypothesize that terminating a random pod in our stateless `user-profile-service` deployment will not increase p99 API latency above our 200ms threshold. This is because the ReplicaSet controller will immediately create a replacement pod, and the Kubernetes Service will route traffic only to the remaining healthy pods while the replacement starts.”
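One way to keep hypotheses honest is to record them in a structure that refuses to leave any part of the template blank. The sketch below is only an illustration of that idea; the `Hypothesis` class and its field names are invented here and do not belong to any chaos tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    failure: str    # X: the fault we inject
    metric: str     # Y: the steady-state metric we watch
    threshold: str  # the bound Y must stay within
    mechanism: str  # Z: why we expect the system to cope

    def __post_init__(self) -> None:
        # A hypothesis with a blank part is not testable, so reject it early.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"{name} must not be empty")

    def statement(self) -> str:
        return (
            f"We believe that if we {self.failure}, then {self.metric} "
            f"will stay {self.threshold} because {self.mechanism}."
        )


pod_kill = Hypothesis(
    failure="terminate a random user-profile-service pod",
    metric="p99 API latency",
    threshold="at or below 200ms",
    mechanism="the remaining pods absorb traffic while a replacement starts",
)
print(pod_kill.statement())
```

Storing the statement next to the experiment definition also gives you something to compare against when you review the results.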

Step 3: Design and Run the Experiment

This is where you inject the failure. The single most important concept here is blast radius—the potential scope of impact if your hypothesis is wrong. Always start with the smallest possible blast radius.

- Start in Pre-Production: Run your first experiments in a robust staging environment that closely mirrors production.

- Limit the Scope: When moving to production, target a tiny fraction of the system. For instance:

  - One specific host or container instance.

  - Traffic from internal employees only.

  - A single availability zone during a low-traffic period.

  - A small percentage of traffic in a canary deployment.

- Have an Emergency Stop: Always have a clear, immediate way to halt the experiment and revert the system to its normal state if things go wrong. Most chaos engineering tools have this built in.

- Communicate Clearly: Announce when and where you are running an experiment. This prevents on-call engineers from panicking and wasting time on a “phantom” incident. A dedicated Slack channel is great for this.
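The emergency stop is easier to reason about when you see it as a control loop. The following is a minimal sketch, not the behavior of any particular tool: `inject`, `rollback`, and `steady_state_ok` are hypothetical callables you would wire to your own tooling, and `wait` would normally be something like `lambda: time.sleep(5)`.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    SKIPPED = "skipped"  # system was already unhealthy, nothing injected
    ABORTED = "aborted"  # steady state was violated, experiment halted early
    PASSED = "passed"    # steady state held for every check


def run_experiment(
    inject: Callable[[], None],
    rollback: Callable[[], None],
    steady_state_ok: Callable[[], bool],
    checks: int,
    wait: Callable[[], None] = lambda: None,
) -> Outcome:
    """Inject a fault, poll the steady state, and always roll back."""
    if not steady_state_ok():
        # Never start an experiment on a system that is already degraded.
        return Outcome.SKIPPED
    inject()
    try:
        for _ in range(checks):
            wait()
            if not steady_state_ok():
                return Outcome.ABORTED  # emergency stop; rollback still runs
        return Outcome.PASSED
    finally:
        rollback()


# Simulated run: healthy before injection, healthy once, then degraded.
events = []
readings = iter([True, True, False, True])
outcome = run_experiment(
    inject=lambda: events.append("inject"),
    rollback=lambda: events.append("rollback"),
    steady_state_ok=lambda: next(readings),
    checks=5,
)
print(outcome.name, events)  # ABORTED ['inject', 'rollback']
```

Putting the rollback in a `finally` block means the fault is removed whether the experiment passes, aborts, or raises an unexpected exception.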

Running a “GameDay”—a dedicated, scheduled event where teams come together to run chaos experiments—is an excellent way to start. It creates a safe, collaborative environment focused on learning.

Step 4: Verify and Learn

After the experiment concludes, analyze the results. Compare the metrics from your experimental group to your control group (or the baseline steady state).

- If the hypothesis was validated: Congratulations! You’ve just gained real, empirical evidence that your resiliency mechanism works as expected. Your confidence in the system has increased.

- If the hypothesis was disproven: This is the most valuable outcome! The steady state was impacted. Now, the real work begins. Conduct a root cause analysis to understand why the system failed. Did a retry mechanism have an improper backoff? Was a timeout configured too aggressively? Did a cache fail to evict stale data?

The output of this analysis should be a set of actionable tickets for your backlog. Fix the vulnerability, and then—critically—rerun the experiment to prove that your fix works. This iterative loop is what drives continuous improvement in reliability.
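The comparison between control and experimental groups can be as simple as a percentile check. Here is a rough sketch using only the standard library; the sample data is made up, and the 10% regression allowance (`max_regression`) is an assumption chosen for illustration, not a recommended value.

```python
from statistics import quantiles
from typing import Sequence


def p99(samples_ms: Sequence[float]) -> float:
    """99th percentile of latency samples (needs at least two samples)."""
    return quantiles(samples_ms, n=100, method="inclusive")[98]


def hypothesis_held(
    control_ms: Sequence[float],
    experiment_ms: Sequence[float],
    threshold_ms: float,
    max_regression: float = 0.10,  # assumed tolerance relative to control
) -> bool:
    """True if the experiment's p99 stays under the absolute threshold
    and within the allowed regression compared to the control group."""
    control = p99(control_ms)
    experiment = p99(experiment_ms)
    return experiment <= threshold_ms and experiment <= control * (1 + max_regression)


# Made-up latency samples in milliseconds.
control = [100.0] * 100
healthy = [105.0] * 100                 # slightly slower, within tolerance
degraded = [100.0] * 98 + [500.0] * 2   # a slow tail appears under the fault

print(hypothesis_held(control, healthy, threshold_ms=200))   # True
print(hypothesis_held(control, degraded, threshold_ms=200))  # False
```

Checking against both an absolute threshold and the control group guards against two different mistakes: passing an experiment on a system that was already slow, and failing one because of load that affected both groups equally.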

The ‘What’: Tools of the Trade

The Chaos Engineering ecosystem has matured significantly, offering a range of tools from open-source frameworks to full-fledged enterprise platforms.

Chaos Monkey and the Simian Army

The tool that started it all. Developed at Netflix, Chaos Monkey works by randomly terminating virtual machine instances and containers within a production environment. Its purpose is simple but profound: it forces engineers to build services that can tolerate instance failure. It was originally released as part of a larger suite called the Simian Army, which included tools for injecting other types of failures like latency and network issues. Netflix has since retired that suite, and Chaos Monkey now ships as a standalone project.
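The core idea is small enough to sketch in a few lines. To be clear, this is not Netflix's implementation: the function, the opt-out set, and the rule about never picking the last eligible instance are all assumptions made for illustration, and the instance IDs are invented.

```python
import random
from typing import Iterable, Optional


def pick_victim(
    instances: Iterable[str],
    opted_out: Iterable[str],
    rng: random.Random,
) -> Optional[str]:
    """Choose one instance at random from those that have not opted out.

    Returns None when fewer than two candidates remain, so this sketch
    never removes the last eligible instance of a group.
    """
    candidates = sorted(set(instances) - set(opted_out))
    if len(candidates) < 2:
        return None
    return rng.choice(candidates)


fleet = ["i-a", "i-b", "i-c"]  # hypothetical instance IDs
victim = pick_victim(fleet, opted_out={"i-b"}, rng=random.Random())
print(victim)  # either 'i-a' or 'i-c'
```

The termination call itself would go through your cloud provider or orchestrator; the interesting part is that the selection is random, which is what stops teams from quietly depending on any one instance.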

Gremlin: Chaos-as-a-Service

Gremlin is a leading commercial Chaos Engineering platform. It provides a “Failure-as-a-Service” model with a user-friendly UI and a robust API. Key features include:

- A large library of “attacks”: Inject failures at the resource level (CPU, memory, disk I/O), network level (latency, packet loss, DNS blackholing), and state level (shutting down hosts, killing processes).

- Fine-grained targeting: Precisely target specific hosts, containers, or applications using tags.

- Built-in safety mechanisms: Automatically halt experiments if the system deviates too far from its steady state.

- GameDay features: Tools for planning, executing, and documenting GameDay exercises.

LitmusChaos: Open-Source and Kubernetes-Native

For teams heavily invested in Kubernetes, LitmusChaos is a fantastic open-source option. A CNCF (Cloud Native Computing Foundation) incubating project (it joined as a Sandbox project and was later promoted), it’s designed to be completely Kubernetes-native.

- Chaos via CRDs: You define experiments declaratively using Kubernetes Custom Resource Definitions (CRDs) like `ChaosEngine` and `ChaosExperiment`. This allows you to manage chaos tests just like any other Kubernetes manifest.

- ChaosHub: A central hub of pre-defined chaos experiments that you can easily install and run in your cluster.

- Extensible and Pluggable: You can contribute your own experiments and integrate Litmus into GitOps workflows with tools like Argo CD or Flux.

Here’s a simple example of a LitmusChaos experiment that randomly kills a pod belonging to an Nginx deployment:

    # chaos-experiment.yaml
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: nginx-chaos
      namespace: litmus
    spec:
      # Set to 'active' to run the experiment or 'stop' to halt it
      engineState: 'active'
      appinfo:
        appns: 'default'
        applabel: 'app=nginx'
        appkind: 'deployment'
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-delete
          spec:
            components:
              env:
                # How long the chaos lasts, in seconds
                - name: TOTAL_CHAOS_DURATION
                  value: '30'
                # The namespace of the application
                - name: APP_NAMESPACE
                  value: 'default'
                # The label of the application pod
                - name: APP_LABEL
                  value: 'app=nginx'
                # The kind of application
                - name: APP_KIND
                  value: 'deployment'

Chaos in Production: Best Practices and Cultural Shift

The idea of running chaos experiments in production can be scary, but it is where the most valuable insights are found. The key is to approach it with discipline and a focus on safety.

Start Small and Automate

No one should start by running a massive, system-wide experiment in production. The path to production chaos is gradual:

- Start in dev/staging: Get familiar with the tools and processes in a safe environment.

- Run your first production experiment during a GameDay: This ensures all hands are on deck, and the experiment is well-planned and observed.

- Minimize the blast radius: Use the techniques discussed earlier (targeting a single host, internal users, etc.).

- Automate for continuous verification: The ultimate goal is to integrate chaos experiments into your CI/CD pipeline. For example, after a successful canary deployment, automatically run a small-scale latency injection experiment against the new version. This transforms chaos from a one-off event into a continuous validation of your system’s resilience.
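A pipeline stage like the one in the last item needs some way to inject latency into the canary. Dedicated tools usually do this at the network layer; as an application-level stand-in, a minimal sketch might wrap a handler so that a chosen fraction of calls is delayed. The decorator name, its parameters, and the `get_products` handler are all hypothetical.

```python
import functools
import random
import time
from typing import Callable, Optional


def with_latency(
    delay_s: float,
    fraction: float,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
):
    """Decorator that delays roughly `fraction` of calls by `delay_s` seconds."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = rng or random.Random()

    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            if rng.random() < fraction:
                sleep(delay_s)  # the injected fault
            return fn(*args, **kwargs)
        return wrapper

    return decorator


# Record the delays instead of really sleeping, so the demo runs instantly.
delays = []


@with_latency(delay_s=0.3, fraction=1.0, sleep=delays.append)
def get_products():
    return ["widget"]


print(get_products(), delays)  # ['widget'] [0.3]
```

In a pipeline you would keep `fraction` small, enable the wrapper only on the canary, and fail the stage if your steady-state metrics leave their bounds while it is active.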

GameDays and Blameless Postmortems

GameDays are critical for building confidence and muscle memory. They are not just for SREs; they should involve developers, product managers, and anyone involved in the service. It’s a team sport.

When an experiment reveals a weakness, the follow-up is just as important. A blameless postmortem culture is an absolute prerequisite for successful Chaos Engineering.

“We are not looking for a person to blame; we are looking for a systemic cause. The goal is to understand how our system, processes, and assumptions failed, not who failed.”

This psychological safety encourages curiosity and honesty, which are essential for uncovering deep, systemic issues.

Building a Culture of Resilience

Ultimately, Chaos Engineering is more than a set of tools or a process; it’s a cultural shift. It moves the organization from a reactive “break-fix” model to a proactive, preventative one.

- It distributes the responsibility for reliability. When developers are involved in chaos experiments on their own services, they start thinking about failure modes—like network partitions and dependency timeouts—during the initial design and coding phases.

- It replaces fear of failure with curiosity. Instead of asking “What if this service fails?”, teams start asking “What happens when this service fails? Let’s find out.”

- It builds data-driven confidence. You no longer just *hope* your failover mechanism works; you *know* it works because you’ve tested it repeatedly in the real world.

Conclusion: Build Confidence, Not Chaos

In the complex, ever-changing world of cloud-native systems, failure is not an “if,” but a “when.” We can either wait for it to happen at the most inconvenient time, or we can choose to initiate it on our own terms, in a controlled and observable way. Chaos Engineering provides the framework to do just that.

It’s a journey that begins with a simple question: “What is the most important promise our system makes to our users?” From there, you define your steady state, form a hypothesis about its resilience, and design a small, safe experiment to test it. The insights you gain from that first experiment—whether it succeeds or fails—will be invaluable, setting you on a path not to create more chaos, but to build unshakeable confidence in the face of it.