In the rapidly evolving landscape of distributed systems, microservices, and cloud-native architectures, the terms “observability” and “monitoring” are often used interchangeably, leading to confusion and, more critically, to systems that are difficult to understand and troubleshoot. For Site Reliability Engineers, Software Engineers, and Architects, understanding the nuanced yet fundamental differences between these concepts is not merely an academic exercise; it is crucial for building resilient, high-performing, and maintainable software systems. This article aims to clarify this distinction, delve into the core components of modern observability, and provide practical guidance on how to build systems that are truly observable.

Monitoring: The Traditional Lens

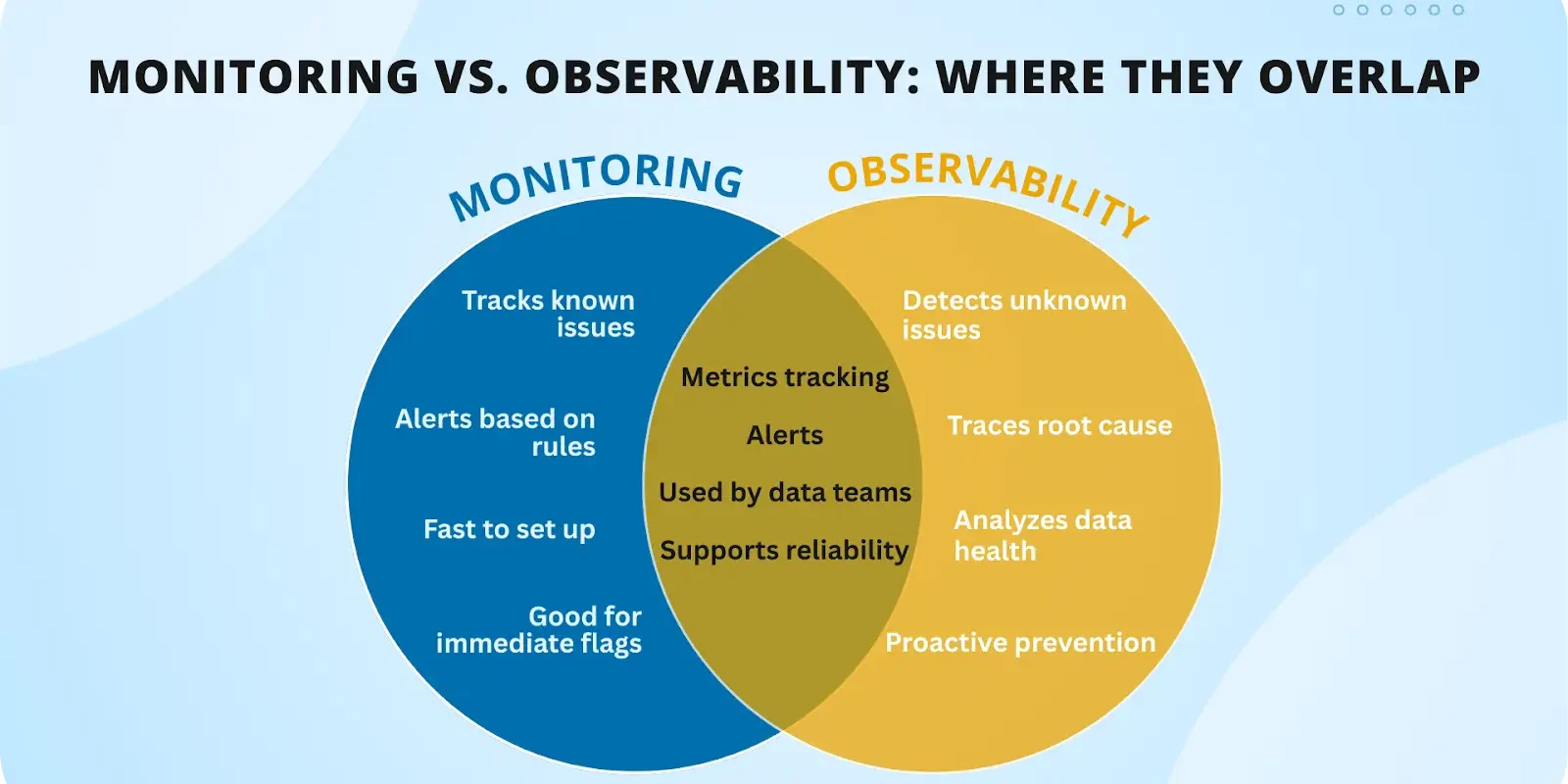

Monitoring is the practice of collecting, processing, aggregating, and displaying quantitative data about a system to track its health and performance. Historically, monitoring systems have focused on answering predefined questions about the health of known components. Think of it as keeping a watchful eye on a set of predetermined metrics or logs to ensure that everything is operating within expected parameters.

Key Characteristics of Monitoring

- Reactive: Monitoring typically alerts you when something is already wrong, or about to go wrong, based on pre-established thresholds.

- Focus on Known-Unknowns: It answers questions like “Is the CPU utilization too high?”, “Is the database query latency above 200ms?”, or “Are there more than 500 errors per minute?”. These are issues you anticipate and have defined metrics or log patterns for.

- External Perspective (Black-Box): Often, monitoring tools observe a system from the outside, checking if an endpoint responds, if a service is running, or if resource usage is within limits, without necessarily understanding the internal logic of the application.

- Dashboards and Alerts: Monitoring primarily relies on dashboards that visualize key metrics and alerts that trigger when those metrics cross a threshold.
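To make the idea of "predefined questions" concrete, here is a minimal sketch of threshold-based alerting. The metric names and the `THRESHOLDS` table are hypothetical; the latency and error limits reuse the illustrative figures from the list above, and the 90% CPU limit is an assumption.

```python
from dataclasses import dataclass

# Hypothetical thresholds; every name here is illustrative.
THRESHOLDS = {
    "cpu_utilization_percent": 90.0,  # assumed limit, not from the article
    "db_query_latency_ms": 200.0,     # figure used in the text above
    "errors_per_minute": 500.0,       # figure used in the text above
}


@dataclass(frozen=True)
class Alert:
    metric: str
    value: float
    threshold: float


def evaluate(sample: dict) -> list:
    """Return one Alert for every known metric that exceeds its threshold."""
    alerts = []
    for metric, limit in THRESHOLDS.items():
        value = sample.get(metric)
        if value is not None and value > limit:
            alerts.append(Alert(metric, value, limit))
    return alerts


sample = {
    "cpu_utilization_percent": 42.0,
    "db_query_latency_ms": 350.0,
    "errors_per_minute": 12.0,
}
alerts = evaluate(sample)
for alert in alerts:
    print(f"ALERT {alert.metric}={alert.value} (threshold {alert.threshold})")
```

Anything that is not in the table, such as a metric nobody thought to watch, passes silently. That is the "known-unknowns" boundary in practice.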

Limitations of Monitoring in Modern Systems

While essential, traditional monitoring falls short in the face of modern system complexity:

- Microservices and Distributed Architectures: A single user request might traverse dozens of services, databases, message queues, and external APIs. A simple “service is down” alert doesn’t tell you which part of the complex interaction failed, or why.

- Ephemerality: Containers, serverless functions, and auto-scaling groups mean that instances are short-lived. Static monitoring configurations struggle to keep up.

- High Change Velocity: CI/CD pipelines lead to frequent deployments. New features or code changes can introduce unforeseen failure modes that no pre-configured monitor could anticipate.

- Lack of Context: A metric indicating high latency is useful, but it doesn’t provide the granular context needed to debug the root cause, such as the specific user request, the code path, or the external dependency that slowed it down.

Monitoring tells you what is happening. It’s a critical first line of defense, but it rarely tells you why.

Observability: A Paradigm Shift

Observability, a concept borrowed from control theory, refers to how well the internal states of a system can be inferred from its external outputs. In the context of software, an observable system allows you to ask arbitrary questions about its internal state, even for issues you didn’t anticipate. It’s about empowering engineers to understand the system’s behavior deeply and debug issues, especially the “unknown-unknowns”—problems you didn’t even know existed or couldn’t have predicted.

Key Characteristics of Observability

- Proactive and Exploratory: Observability is about having the data and tools to explore, hypothesize, and debug issues dynamically. You don’t just react to alerts; you investigate the underlying causes.

- Focus on Unknown-Unknowns: It equips you to answer questions like “Why did that specific user request fail only for users in Region X, at that exact time, when all service health checks were green?”, or “What specific microservice dependency is causing the intermittent 5xx errors that only appear under certain load conditions?”.

- Internal Perspective (White-Box): Observability requires instrumentation within the application code to expose rich, contextual data about its internal operations, state transitions, and interactions.

- Data-Driven and Context-Rich: It relies on collecting vast amounts of high-fidelity, highly contextual telemetry data (metrics, logs, traces) that can be correlated and analyzed to reconstruct the system’s behavior.
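As a rough illustration of asking arbitrary questions, the sketch below slices a handful of context-rich request events by whichever attribute you choose at query time. The event fields (`region`, `build`, and so on) and their values are invented for this example.

```python
from collections import Counter

# Hypothetical request events; field names and values are illustrative only.
EVENTS = [
    {"route": "/checkout", "region": "eu-west", "status": 500, "build": "v42"},
    {"route": "/checkout", "region": "eu-west", "status": 500, "build": "v42"},
    {"route": "/checkout", "region": "us-east", "status": 200, "build": "v41"},
    {"route": "/checkout", "region": "eu-west", "status": 200, "build": "v41"},
    {"route": "/search", "region": "us-east", "status": 200, "build": "v41"},
]


def failure_rate_by(events, field):
    """Group events by any field and return the share of 5xx responses per group."""
    totals, failures = Counter(), Counter()
    for event in events:
        key = event.get(field, "<missing>")
        totals[key] += 1
        if event["status"] >= 500:
            failures[key] += 1
    return {key: failures[key] / totals[key] for key in totals}


by_region = failure_rate_by(EVENTS, "region")
by_build = failure_rate_by(EVENTS, "build")
print(by_region)
print(by_build)
```

In this made-up data, slicing by region suggests a regional problem, while slicing by build shows that every failure comes from one build. Neither question had to be anticipated when the events were recorded.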

Why Observability Matters Now More Than Ever

- Complexity Management: It provides the necessary visibility into increasingly complex, distributed systems, enabling engineers to navigate the intricate web of dependencies.

- Faster Mean Time To Resolution (MTTR): By providing deep context, observability significantly reduces the time it takes to identify, diagnose, and resolve production incidents.

- Shift-Left Debugging: Encourages developers to build observability into their code from the outset, leading to more robust systems from day one.

- SRE Culture and Blameless Post-Mortems: Rich telemetry data fosters a culture of learning from incidents by providing objective evidence and helping teams understand systemic failures without finger-pointing.

“Monitoring is a subset of observability. You can have monitoring without observability, but you cannot have true observability without monitoring.”

The Three Pillars of Observability

Observability is built upon three fundamental types of telemetry data: Metrics, Logs, and Traces. While each serves a distinct purpose, their true power emerges when they are correlated and used together to provide a holistic view of the system.

Metrics

Metrics are numerical measurements representing data points over time. They are aggregated, indexed, and typically used to describe the health and performance of a system or service at a high level. Metrics are excellent for dashboards, alerting, and identifying trends or anomalies quickly.

- Characteristics: Aggregatable, low cardinality (typically), time-series data.

- Types:

  - Counters: Monotonically increasing values (e.g., total requests, error count).

  - Gauges: Current values that can go up or down (e.g., CPU utilization, memory usage, queue size).

  - Histograms/Summaries: Quantiles and distributions of observations (e.g., request latency P99, P95).

- Use Cases: Service health checks, capacity planning, anomaly detection, real-time alerts.

Example: Collecting a simple metric with a Prometheus client (conceptual)

```python
# Python using the Prometheus client library
import time

from prometheus_client import Counter, Gauge, Histogram, generate_latest

# Define a counter for total requests
REQUEST_COUNT = Counter('my_service_requests_total', 'Total requests to my service.')

# Define a gauge for current active connections
ACTIVE_CONNECTIONS = Gauge('my_service_active_connections', 'Current active connections.')

# Define a histogram for request durations
REQUEST_DURATION_SECONDS = Histogram(
    'my_service_request_duration_seconds',
    'Request duration in seconds.',
    buckets=[0.1, 0.2, 0.5, 1.0, 2.0, 5.0]
)

def process_request():
    REQUEST_COUNT.inc()  # Increment the counter
    with REQUEST_DURATION_SECONDS.time():  # Measure duration
        # Simulate processing a request
        time.sleep(0.3)
    # Potentially update gauge if connections change

# In a web server endpoint:
# @app.route('/metrics')
# def metrics():
#     return generate_latest()  # Exposes Prometheus format
```
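Percentiles such as P99 are not stored in a histogram directly; they are estimated from cumulative bucket counts. The sketch below shows one way to do that with linear interpolation inside the matching bucket, in the spirit of what Prometheus does at query time. The bucket bounds match the example above, while the counts are made up.

```python
import math


def estimate_quantile(q, buckets):
    """Estimate quantile q from cumulative (upper_bound, count) pairs.

    `buckets` must be sorted by upper bound and end with (math.inf, total).
    Values are assumed to be spread evenly inside each bucket.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be between 0 and 1")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0
    for upper_bound, count in buckets:
        if count >= rank:
            in_bucket = count - lower_count
            if math.isinf(upper_bound) or in_bucket == 0:
                # Cannot interpolate into the +Inf bucket or an empty one.
                return lower_bound
            fraction = (rank - lower_count) / in_bucket
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound


# Made-up cumulative counts for the bucket bounds used above.
BUCKETS = [(0.1, 10), (0.2, 30), (0.5, 80), (1.0, 95), (2.0, 99), (5.0, 100), (math.inf, 100)]

p50 = estimate_quantile(0.50, BUCKETS)
p99 = estimate_quantile(0.99, BUCKETS)
print(f"p50 ~ {p50:.2f}s, p99 ~ {p99:.2f}s")
```

The estimate is only as precise as the bucket layout: a P99 that falls in the 1.0 to 2.0 second bucket cannot be resolved more finely than that, which is why bucket boundaries are worth choosing around your latency targets.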

Logs

Logs are discrete, immutable, timestamped records of events that occur within an application or system. They provide detailed context about specific events, such as an error occurring, a user action, or a state change. Modern logging emphasizes structured logging (e.g., JSON format) to make logs machine-readable and easily searchable.

- Characteristics: Unstructured or structured, high cardinality, verbose.

- Importance of Structured Logging: Allows for easier parsing, filtering, and analysis by logging tools. Key-value pairs provide rich context.

- Use Cases: Detailed debugging, forensic analysis, auditing, understanding specific event sequences.

Example: Structured logging in Python

```python
import json
import logging

# Configure logging to output JSON (simplified example)
class JsonFormatter(logging.Formatter):
    def format(self, record):
        log_record = {
            "timestamp": self.formatTime(record, self.datefmt),
            "level": record.levelname,
            "message": record.getMessage(),
            "service": "user-service",
            "module": record.name,  # Logger name; equals the module path with getLogger(__name__)
            "line": record.lineno,
            "trace_id": getattr(record, 'trace_id', 'N/A'),  # Custom attribute for trace_id
            "user_id": getattr(record, 'user_id', 'N/A'),  # Custom attribute for user_id
            "request_id": getattr(record, 'request_id', 'N/A'),
            "data": getattr(record, 'data', {})  # Additional structured data
        }
        if record.exc_info:
            # Include the traceback when the caller passes exc_info=True
            log_record["exception"] = self.formatException(record.exc_info)
        return json.dumps(log_record)

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)

# Example usage. Only the keys the formatter reads are emitted,
# so ad hoc fields go under 'data'.
logger.info(
    "User logged in successfully",
    extra={'user_id': 'alice123', 'trace_id': 'abc-123', 'data': {'ip_address': '192.168.1.1'}}
)

try:
    result = 10 / 0
except ZeroDivisionError:
    logger.error(
        "Division by zero error",
        exc_info=True,
        extra={'user_id': 'bob456', 'trace_id': 'def-456', 'data': {'operation': 'calculate_ratio'}}
    )
```
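The payoff of structured logs is that they can be queried like data. As a small sketch, the snippet below parses JSON log lines and groups error messages by an arbitrary field. The sample lines are invented and only loosely mirror the formatter above.

```python
import json
from collections import defaultdict

# Hypothetical log lines; the values are made up for illustration.
RAW_LOGS = """\
{"level": "INFO", "message": "User logged in successfully", "user_id": "alice123", "trace_id": "abc-123"}
{"level": "ERROR", "message": "Division by zero error", "user_id": "bob456", "trace_id": "def-456"}
not-json garbage line
{"level": "ERROR", "message": "Payment declined", "user_id": "bob456", "trace_id": "ghi-789"}
"""


def parse_lines(raw):
    """Yield one dict per valid JSON object line, skipping anything unparseable."""
    for line in raw.splitlines():
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(record, dict):
            yield record


def messages_by(records, field, level=None):
    """Group log messages by `field`, optionally keeping a single level."""
    grouped = defaultdict(list)
    for record in records:
        if level is not None and record.get("level") != level:
            continue
        grouped[record.get(field, "N/A")].append(record.get("message", ""))
    return dict(grouped)


errors_by_user = messages_by(parse_lines(RAW_LOGS), "user_id", level="ERROR")
print(errors_by_user)
```

Doing the same with free-form text logs would require a regular expression per message format, which is the main practical argument for structured logging.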

Traces

Traces (or distributed traces) represent the end-to-end journey of a single request or operation as it propagates through a distributed system. A trace is composed of multiple “spans,” where each span represents a logical unit of work (e.g., a function call, a service request, a database query) within that journey. Traces are crucial for understanding the causal relationships and performance characteristics across service boundaries.

- Characteristics: High cardinality, contextual, hierarchical.

- Spans: Each span has a name, start/end time, attributes (key-value pairs describing the operation), and a parent-child relationship with other spans.

- Context Propagation: The key to distributed tracing is propagating a unique trace ID and parent span ID across service calls (e.g., via HTTP headers or message queue headers).

- Use Cases: Root cause analysis in distributed systems, performance bottleneck identification, service dependency mapping, profiling.

Example: Conceptual trace propagation

```javascript
// Conceptual flow across services with trace context.
// generateUniqueId, startSpan, endSpan, and makeHttpRequest are placeholders
// for whatever tracing and HTTP libraries you use.

// Service A (initiator)
function handleRequest(req) {
  const traceId = generateUniqueId();
  const spanId = generateUniqueId();

  // Start root span for Service A
  const spanA = startSpan("ServiceA.handleRequest", { traceId: traceId, spanId: spanId });

  // Inject trace context into outgoing request headers
  const headers = {
    "x-trace-id": traceId,
    "x-parent-span-id": spanA.spanId
  };

  // Call Service B
  const responseB = makeHttpRequest("http://service-b/process", headers);

  // End root span
  endSpan(spanA);
}

// Service B (downstream)
function processRequest(req) {
  // Extract trace context from incoming request headers
  const traceId = req.headers["x-trace-id"];
  const parentSpanId = req.headers["x-parent-span-id"];

  // Start child span for Service B: same trace, new span ID, parent set to A's span
  const spanB = startSpan("ServiceB.processRequest", {
    traceId: traceId,
    spanId: generateUniqueId(),
    parentSpanId: parentSpanId
  });

  // Perform work, maybe call another service or a database
  // ...

  // End child span
  endSpan(spanB);
}
```
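The `x-trace-id` and `x-parent-span-id` headers above are illustrative. In practice, many tracing libraries carry the same information in the W3C Trace Context `traceparent` header. The following is a minimal sketch of building and parsing version `00` of that header using only the standard library; the helper names are hypothetical and `tracestate` is ignored.

```python
import re
import secrets

# version "00" - 32 hex trace-id - 16 hex parent-id - 2 hex flags (lowercase)
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_trace_id():
    return secrets.token_hex(16)  # 16 random bytes -> 32 hex characters


def new_span_id():
    return secrets.token_hex(8)  # 8 random bytes -> 16 hex characters


def build_traceparent(trace_id, span_id, sampled=True):
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header):
    """Return the trace context as a dict, or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if match is None:
        return None
    trace_id, parent_span_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_span_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return {
        "trace_id": trace_id,
        "parent_span_id": parent_span_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


# Service A creates the header; Service B continues the same trace with a new span.
incoming = build_traceparent(new_trace_id(), new_span_id())
ctx = parse_traceparent(incoming)
outgoing = build_traceparent(ctx["trace_id"], new_span_id(), ctx["sampled"])
print(incoming)
print(outgoing)
```

The trace ID stays constant across hops while each service contributes its own span ID, which is what lets a backend reassemble the spans into one tree.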

The synergy between these three pillars is vital: metrics alert you to a problem, traces help you find the failing service and code path, and logs provide the granular details needed for a full diagnosis.
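The drill-down described above can be sketched with in-memory data: a latency threshold selects suspicious requests, the trace points to the slowest downstream span, and the shared IDs pull up the matching logs. All records, field names, and the `investigate` helper are hypothetical.

```python
# Hypothetical telemetry, linked by trace_id and span_id.
REQUESTS = [  # per-request latency samples (the "metrics" view)
    {"trace_id": "t1", "latency_s": 0.12},
    {"trace_id": "t2", "latency_s": 1.40},
    {"trace_id": "t3", "latency_s": 0.09},
]
SPANS = [
    {"trace_id": "t1", "span_id": "c", "parent_id": None, "name": "service-x GET /orders", "duration_s": 0.12},
    {"trace_id": "t2", "span_id": "a", "parent_id": None, "name": "service-x GET /orders", "duration_s": 1.40},
    {"trace_id": "t2", "span_id": "b", "parent_id": "a", "name": "service-y lookup", "duration_s": 1.31},
]
LOGS = [
    {"trace_id": "t1", "span_id": "c", "level": "INFO", "message": "ok"},
    {"trace_id": "t2", "span_id": "b", "level": "WARNING", "message": "slow query: 1290 ms"},
]


def investigate(threshold_s):
    """For each slow request, find its slowest child span and that span's logs."""
    findings = []
    for request in REQUESTS:
        if request["latency_s"] <= threshold_s:
            continue
        trace_id = request["trace_id"]
        children = [s for s in SPANS if s["trace_id"] == trace_id and s["parent_id"] is not None]
        if not children:
            continue
        slowest = max(children, key=lambda s: s["duration_s"])
        related_logs = [
            entry["message"]
            for entry in LOGS
            if entry["trace_id"] == trace_id and entry["span_id"] == slowest["span_id"]
        ]
        findings.append({"trace_id": trace_id, "slowest_span": slowest["name"], "logs": related_logs})
    return findings


findings = investigate(threshold_s=1.0)
print(findings)
```

Real backends run these joins across separate stores, but the principle is the same: without a shared identifier on every signal, each step would be a manual search.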

Building Observable Systems from the Ground Up

True observability isn’t an afterthought; it’s a design principle. It requires a fundamental shift in how applications are developed and maintained, moving instrumentation from an operational concern to a core development task.

Designing for Observability

Incorporating observability starts at the architectural design phase:

- Instrumentation as a First-Class Citizen: Treat telemetry data generation as important as business logic. Embed instrumentation directly into your code.

- Semantic Logging: Don’t just log strings. Log structured data (JSON preferred) with meaningful attributes (e.g., `user_id`, `request_id`, `service_name`) that can be easily queried and correlated.

- Context Propagation: Design your inter-service communication to always carry trace context (`trace_id`, `span_id`). This is essential for distributed tracing.

- Exposing Internal State: Create health endpoints and metrics endpoints that provide valuable insights into your service’s operational status and performance.
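One way to combine semantic logging with context propagation inside a single service is to keep the current trace ID in a context variable and let a logging filter stamp it onto every record. The sketch below uses only the standard library; the logger name and the `handle_request` function are hypothetical.

```python
import contextvars
import io
import json
import logging

# Holds the trace ID for whatever request the current task is serving.
trace_id_var = contextvars.ContextVar("trace_id", default="N/A")


class TraceContextFilter(logging.Filter):
    """Attach the current trace ID to every log record."""

    def filter(self, record):
        record.trace_id = trace_id_var.get()
        return True


class JsonLineFormatter(logging.Formatter):
    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            "trace_id": record.trace_id,
        })


stream = io.StringIO()  # stands in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonLineFormatter())
handler.addFilter(TraceContextFilter())

logger = logging.getLogger("context_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False


def handle_request(incoming_trace_id):
    token = trace_id_var.set(incoming_trace_id)
    try:
        logger.info("processing request")  # no trace_id passed explicitly
    finally:
        trace_id_var.reset(token)


handle_request("abc-123")
logger.info("outside any request")
lines = [json.loads(line) for line in stream.getvalue().splitlines()]
print(lines)
```

Because the ID travels in the context rather than in function arguments, code deep in the call stack gets correlated logs without knowing anything about tracing.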

Instrumentation Best Practices

- Automate Where Possible: Leverage frameworks and libraries that offer automatic instrumentation for common components (e.g., web frameworks, database drivers, message queues).

- Standardize Naming Conventions: Use consistent naming for metrics, log attributes, and span names across all services. This reduces cognitive load and improves queryability. OpenTelemetry provides excellent semantic conventions.

- Enrich Telemetry: Add business-relevant attributes to your telemetry data. For example, in an e-commerce system, add `order_id`, `customer_id`, or `product_sku` to logs and traces related to order processing.

- Consider Cardinality: Be mindful of metric cardinality. While high cardinality is acceptable for logs and traces (within reason), excessive unique labels for metrics can strain your monitoring system.

- Performance Impact: Instrumentation should be lightweight and have minimal performance overhead. Choose libraries and tools designed for this purpose.
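The cardinality warning is easiest to appreciate with arithmetic: the worst-case number of time series for one metric is the product of the distinct values of each label. The label names and counts below are assumptions chosen for illustration.

```python
from math import prod


def series_count(label_values):
    """Upper bound on time series for one metric: product of distinct values per label."""
    return prod(label_values.values())


# Hypothetical label sets for a request-duration metric.
bounded = {"method": 5, "status_code": 8, "region": 4}
unbounded = {**bounded, "user_id": 100_000}

print(series_count(bounded))    # 5 * 8 * 4
print(series_count(unbounded))  # the same, multiplied by 100,000 users
```

Adding a single per-user label turns 160 potential series into 16 million, which is why identifiers like `user_id` usually belong on logs and spans rather than on metric labels.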

“Observability is not something you buy, it’s something you build into your systems from the very beginning. It’s a mindset shift towards understanding the internal state of your services at runtime.”

OpenTelemetry and Modern Observability Stacks

The proliferation of observability tools and vendors led to fragmentation and vendor lock-in. Engineers often had to rewrite instrumentation when switching providers or integrating different systems. OpenTelemetry (OTel) emerged to address these challenges.

OpenTelemetry: The Open Standard

OpenTelemetry is a vendor-agnostic set of APIs, SDKs, and tools designed to standardize the generation, collection, and export of telemetry data (metrics, logs, and traces). It is a Cloud Native Computing Foundation (CNCF) project and has become the de facto standard for instrumenting cloud-native applications.

- Unified API: Provides a single set of APIs for all three pillars of observability across various programming languages.

- Vendor Neutrality: Data is collected in a standardized format and can be exported to any OpenTelemetry-compatible backend, whether open-source (Prometheus, Grafana, Jaeger) or commercial (Datadog, New Relic, Honeycomb, Lightstep).

- Reduced Lock-in: By instrumenting with OpenTelemetry, you decouple your application from specific observability vendors, allowing greater flexibility in choosing and switching tools.

- OpenTelemetry Collector: A powerful, vendor-agnostic proxy that receives, processes, and exports telemetry data. It can filter, transform, and aggregate data before sending it to one or more backends.
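The receive, process, export flow of the Collector can be pictured with a few lines of plain Python. This is a conceptual sketch only: the real Collector is configured declaratively rather than programmed like this, and every function and field name below is hypothetical.

```python
def drop_health_checks(record):
    """Processor: filter out noisy health-check telemetry (None means drop)."""
    return None if record.get("route") == "/healthz" else record


def add_environment(record):
    """Processor: enrich every record with a deployment attribute."""
    return {**record, "deployment.environment": "staging"}


def run_pipeline(records, processors, exporters):
    """Pass each record through the processors, then fan out to all exporters."""
    processed = []
    for record in records:
        current = record
        for processor in processors:
            current = processor(current)
            if current is None:
                break  # record was dropped by this processor
        if current is not None:
            processed.append(current)
    for exporter in exporters:
        exporter(processed)
    return processed


# Two lists stand in for two different backends.
backend_a, backend_b = [], []
result = run_pipeline(
    [{"route": "/healthz", "duration_ms": 1}, {"route": "/orders", "duration_ms": 87}],
    processors=[drop_health_checks, add_environment],
    exporters=[backend_a.extend, backend_b.extend],
)
print(result)
```

The application only ever talks to the pipeline's input; which backends receive the data, and in what shape, is decided in one place outside the application code.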

Example: Conceptual OpenTelemetry Python instrumentation

```python
# Python using the OpenTelemetry SDK
import time

from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import ConsoleSpanExporter, SimpleSpanProcessor
from opentelemetry.semconv.trace import SpanAttributes

# Set up a tracer provider
provider = TracerProvider()
processor = SimpleSpanProcessor(ConsoleSpanExporter())  # For demonstration, prints to console
provider.add_span_processor(processor)
trace.set_tracer_provider(provider)

# Get a tracer for your application
tracer = trace.get_tracer(__name__)

def process_order(order_id: str, user_id: str):
    # Start a new trace or continue an existing one
    with tracer.start_as_current_span("process_order", attributes={
        SpanAttributes.HTTP_METHOD: "POST",  # Semantic conventions
        "app.order_id": order_id,
        "app.user_id": user_id
    }) as parent_span:
        # Simulate some work
        time.sleep(0.1)

        # Start a child span for a database operation
        with tracer.start_as_current_span("database_lookup", attributes={
            SpanAttributes.DB_SYSTEM: "postgresql",
            SpanAttributes.DB_STATEMENT: "SELECT * FROM orders WHERE id = %s"
        }) as db_span:
            time.sleep(0.05)
            # Add event to span
            db_span.add_event("database_query_complete", {"rows_returned": 1})

        # Simulate calling an external service
        with tracer.start_as_current_span("call_payment_gateway") as payment_span:
            payment_span.set_attribute("payment.gateway", "stripe")
            time.sleep(0.08)
            payment_span.set_status(trace.Status(trace.StatusCode.OK))  # Set span status

        parent_span.set_attribute("order.status", "processed")
        parent_span.add_event("order_processed_event")

# Example usage
process_order("ORDER-XYZ-123", "USER-A-456")
```

Modern Observability Stacks

A comprehensive observability stack typically combines several tools, often leveraging OpenTelemetry for data collection:

- Data Collection: OpenTelemetry Collector, Fluent Bit (for logs), Prometheus Node Exporter.

- Metrics Storage & Querying: Prometheus, M3DB, VictoriaMetrics, Grafana Mimir.

- Logs Storage & Querying: Loki, Elasticsearch (ELK Stack), Splunk, Datadog Logs.

- Traces Storage & Visualization: Jaeger, Tempo, Zipkin, Lightstep.

- Visualization & Dashboards: Grafana (for metrics, logs, and traces), Kibana (for logs).

- Alerting: Alertmanager (with Prometheus), integrated platforms.

Commercial full-stack solutions like Datadog, New Relic, and Honeycomb offer integrated platforms that handle all three pillars, often with advanced AI/ML capabilities for anomaly detection and root cause analysis. Many of these platforms also support OpenTelemetry for data ingestion, allowing you to choose the best of both worlds: open-standard instrumentation with powerful commercial backends.

Real-World Use Cases and Best Practices

Use Cases

- Debugging a Latency Spike:

  - Monitoring: An alert fires because the p99 latency for Service X has exceeded 1 second.

  - Observability: You navigate to Service X’s dashboard (metrics) and see the spike. You then dive into traces for requests during that period, filtering by high latency. A trace reveals that Service X is spending an unusual amount of time waiting for a response from Service Y. You click on Service Y’s span in the trace and see that it’s spending most of its time on a specific database query. Clicking into the database span, you find correlated logs showing slow-query warnings that point to a missing index.

- Proactive Anomaly Detection:

  - Monitoring: You have thresholds for known error rates.

  - Observability: Using anomaly detection on metrics, you notice a subtle, sustained increase in a specific HTTP status code (e.g., 404s) that’s below your alerting threshold but indicative of a problem. You then use traces to see which upstream services are generating these 404s and use logs to understand the specific requests causing them, discovering an unhandled edge case in a new deployment.

- Understanding User Journey Performance:

  - Monitoring: Your frontend APM shows the “Login” page is slow.

  - Observability: You trace a complete user login flow from the frontend through authentication services, user profile services, and token generation. The trace exposes that the latency isn’t in any single service but in the cumulative overhead of several sequential internal calls and external identity provider lookups, which was previously masked by individual service health.
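The login scenario, where no single service is slow but the sequence is, can be made visible by computing each span's self time: its duration minus the time spent in its direct children. The spans below are invented, and the calculation assumes children run sequentially without overlapping.

```python
from collections import defaultdict

# Hypothetical spans for one login trace; times are ms from the trace start.
SPANS = [
    {"id": "root", "parent": None, "name": "POST /login", "start": 0, "end": 900},
    {"id": "s1", "parent": "root", "name": "auth-service.verify", "start": 10, "end": 260},
    {"id": "s2", "parent": "root", "name": "idp.lookup", "start": 265, "end": 565},
    {"id": "s3", "parent": "root", "name": "profile-service.get", "start": 570, "end": 790},
    {"id": "s4", "parent": "root", "name": "token-service.issue", "start": 795, "end": 895},
]


def self_times(spans):
    """Map span name -> duration minus the total duration of its direct children."""
    child_time = defaultdict(int)
    for span in spans:
        if span["parent"] is not None:
            child_time[span["parent"]] += span["end"] - span["start"]
    return {
        span["name"]: (span["end"] - span["start"]) - child_time[span["id"]]
        for span in spans
    }


times = self_times(SPANS)
total_ms = SPANS[0]["end"] - SPANS[0]["start"]
for name, ms in sorted(times.items(), key=lambda item: -item[1]):
    print(f"{name:24s} {ms:4d} ms  ({ms / total_ms:.0%} of request)")
```

In this made-up trace the largest contributor accounts for only a third of the request, so per-service dashboards would look healthy. The problem is the chain of sequential calls, which suggests parallelizing or caching rather than tuning any one service.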

Best Practices

- Start Simple, Iterate Often: Don’t try to instrument everything at once. Start with critical services and key operations, then expand.

- Educate Your Team: Ensure developers, SREs, and operations teams understand the difference between monitoring and observability and know how to use observability tools effectively. Foster a culture of instrumentation.

- Integrate into CI/CD: Make instrumentation review part of your code review process. Automatically deploy new services with default instrumentation.

- Define SLOs/SLIs with Observability Data: Leverage your rich telemetry to define meaningful Service Level Indicators (SLIs) and Service Level Objectives (SLOs) that accurately reflect user experience.

- Correlate Everything: Ensure your metrics, logs, and traces are linked by common identifiers (like `trace_id`, `request_id`, `user_id`). This is the key to unified analysis.

- Be Opinionated: Standardize on tools and conventions. While OpenTelemetry offers flexibility, establish internal guidelines for how telemetry should be generated and consumed.

- Don’t Just Collect, Analyze: Raw data is useless without the ability to query, visualize, and extract insights. Invest in powerful analysis tools and train your teams to use them effectively.
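As a small worked example of turning telemetry into an SLI and SLO, the sketch below computes an availability SLI from request counts and the share of the error budget that remains. The request counts and the 99.9% target are assumptions.

```python
def availability_sli(good_events, total_events):
    """SLI as the fraction of good events; treated as 1.0 when there is no traffic."""
    if total_events == 0:
        return 1.0
    return good_events / total_events


def error_budget_remaining(good_events, total_events, slo_target):
    """Fraction of the error budget still unspent (negative once overspent)."""
    actual_failures = total_events - good_events
    if actual_failures == 0:
        return 1.0
    allowed_failures = (1.0 - slo_target) * total_events
    if allowed_failures == 0:
        return float("-inf")  # a 100% target leaves no budget for any failure
    return 1.0 - actual_failures / allowed_failures


# Hypothetical 30-day window: 1,000,000 requests, 600 of them failed.
total, good, target = 1_000_000, 999_400, 0.999

sli = availability_sli(good, total)
remaining = error_budget_remaining(good, total, target)
print(f"SLI = {sli:.4%}, error budget remaining = {remaining:.0%}")
```

With a 99.9% target, one million requests allow roughly 1,000 failures; 600 failures leave about 40% of the budget, a number that can drive decisions such as slowing down risky deployments.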

Conclusion

The distinction between observability and monitoring is more than semantic; it represents a fundamental shift in how we approach understanding and managing complex software systems. Monitoring answers “what” is happening, focusing on predefined conditions and known-unknowns. Observability, on the other hand, empowers us to answer “why” it’s happening, enabling engineers to explore and debug unanticipated issues, the unknown-unknowns, by providing deep, contextual insight into the system’s internal state.

Embracing the three pillars—metrics, logs, and traces—and adopting standards like OpenTelemetry are crucial steps toward building truly observable systems. This isn’t just about adding more tools; it’s about embedding a telemetry-first mindset into your development lifecycle, fostering a culture of curiosity and continuous learning, and ultimately building more resilient, high-performing, and user-centric applications. As systems grow in complexity, the ability to effectively observe them becomes less of a luxury and more of a necessity for survival in the modern software landscape.